CrossEntropyLoss

class `getml.models.loss_functions.CrossEntropyLoss`

Bases: `getml.models.loss_functions._LossFunction`

Cross entropy loss

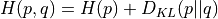

The cross entropy between two probability distributions $p(x)$ and $q(x)$ is a combination of the information contained in $p(x)$ and the additional information stored in $q(x)$ with respect to $p(x)$. In technical terms, it is the entropy of $p(x)$ plus the Kullback-Leibler divergence from $q(x)$ to $p(x)$, which measures how much $q(x)$ differs from $p(x)$ in probability space (strictly speaking it is not a true distance, because it is not symmetric in its arguments).

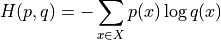
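The decomposition $H(p, q) = H(p) + D_{KL}(p \,\|\, q)$ can be checked numerically for two small discrete distributions. The following is a minimal sketch in plain Python, not part of the getML API; the helper names `entropy`, `kl_divergence` and `cross_entropy` are hypothetical, and natural logarithms (units of nats) are assumed.

```python
import math
from typing import Sequence


def entropy(p: Sequence[float]) -> float:
    """Entropy H(p) = -sum_x p(x) log p(x), in nats."""
    return -sum(px * math.log(px) for px in p if px > 0)


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Kullback-Leibler divergence D_KL(p || q) = sum_x p(x) log(p(x) / q(x)).

    Assumes q(x) > 0 wherever p(x) > 0.
    """
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)


def cross_entropy(p: Sequence[float], q: Sequence[float]) -> float:
    """Cross entropy H(p, q) = -sum_x p(x) log q(x); same assumption on q."""
    return -sum(px * math.log(qx) for px, qx in zip(p, q) if px > 0)


# Two example distributions over the same three outcomes.
p = [0.5, 0.25, 0.25]
q = [0.4, 0.4, 0.2]

h_pq = cross_entropy(p, q)
decomposed = entropy(p) + kl_divergence(p, q)
print(f"H(p, q)           = {h_pq:.6f}")
print(f"H(p) + D_KL(p||q) = {decomposed:.6f}")
```

Since $D_{KL}(p \,\|\, q) \ge 0$, with equality only when $q = p$ (Gibbs' inequality), the cross entropy is never smaller than $H(p)$ and reaches that minimum exactly when $q(x)$ matches $p(x)$. This is what makes it usable as a loss.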

For discrete probability distributions the cross entropy loss can be calculated by

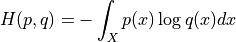
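When the discrete formula $H(p, q) = -\sum_{x \in X} p(x) \log q(x)$ is evaluated directly, two conventions matter: outcomes with $p(x) = 0$ contribute nothing ($0 \cdot \log q(x)$ is taken as $0$), and an outcome with $p(x) > 0$ but $q(x) = 0$ makes the cross entropy infinite. A minimal plain-Python sketch, assuming natural logarithms; `discrete_cross_entropy` is a hypothetical helper, not a getML function.

```python
import math
from typing import Sequence


def discrete_cross_entropy(p: Sequence[float], q: Sequence[float]) -> float:
    """H(p, q) = -sum_x p(x) log q(x) for two distributions over the same support."""
    if len(p) != len(q):
        raise ValueError("p and q must be defined over the same support")
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0.0:
            # By convention 0 * log q(x) = 0, so this outcome is skipped.
            continue
        if qx == 0.0:
            # q assigns zero probability to an outcome that p can produce.
            return math.inf
        total -= px * math.log(qx)
    return total


print(discrete_cross_entropy([1.0, 0.0], [0.5, 0.5]))  # -log(0.5) = log 2
print(discrete_cross_entropy([0.5, 0.5], [1.0, 0.0]))  # prints inf
```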

and for continuous probability distributions by

$$H(p, q) = -\int_X p(x) \log q(x) \, dx$$

with $X$ being the support of the samples and $p(x)$ and $q(x)$ being two discrete or continuous probability distributions over $X$.
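The continuous cross entropy $H(p, q) = -\int_X p(x) \log q(x)\,dx$ can be approximated numerically. The sketch below assumes that $p$ and $q$ are normal densities (an illustrative choice, not something the loss requires), approximates the integral with the midpoint rule over a truncated support, and compares the result with the known closed form for two Gaussians, $H(p, q) = \tfrac{1}{2}\log(2\pi\sigma_q^2) + \frac{\sigma_p^2 + (\mu_p - \mu_q)^2}{2\sigma_q^2}$. All function names are hypothetical.

```python
import math
from typing import Callable


def normal_pdf(x: float, mu: float, sigma: float) -> float:
    """Density of the normal distribution N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def continuous_cross_entropy(
    p: Callable[[float], float],
    q: Callable[[float], float],
    lo: float,
    hi: float,
    n: int = 100_000,
) -> float:
    """Approximate -∫ p(x) log q(x) dx over [lo, hi] with the midpoint rule."""
    dx = (hi - lo) / n
    total = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * dx
        px = p(x)
        if px > 0.0:  # points where p(x) = 0 contribute nothing
            total -= px * math.log(q(x)) * dx
    return total


def gaussian_cross_entropy(mu_p: float, sigma_p: float, mu_q: float, sigma_q: float) -> float:
    """Closed-form H(p, q) for p = N(mu_p, sigma_p^2) and q = N(mu_q, sigma_q^2)."""
    return 0.5 * math.log(2.0 * math.pi * sigma_q**2) + (
        sigma_p**2 + (mu_p - mu_q) ** 2
    ) / (2.0 * sigma_q**2)


# Truncate the support X = (-inf, inf) to [-20, 20]; the tails beyond are negligible here.
numeric = continuous_cross_entropy(
    lambda x: normal_pdf(x, 0.0, 1.0),
    lambda x: normal_pdf(x, 1.0, 2.0),
    lo=-20.0,
    hi=20.0,
)
exact = gaussian_cross_entropy(0.0, 1.0, 1.0, 2.0)
print(f"numeric: {numeric:.6f}   closed form: {exact:.6f}")
```

With $q = p$ the closed form reduces to the differential entropy of a normal distribution, $\tfrac{1}{2}\log(2\pi e \sigma^2)$, which mirrors the discrete case: the cross entropy is smallest when $q(x)$ equals $p(x)$.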

Note

Recommended loss function for classification problems.
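In a classification setting, $p(x)$ is typically the one-hot encoding of the true class and $q(x)$ the predicted class probabilities, so the discrete cross entropy reduces to $-\log q(\text{true class})$; averaging over samples gives the familiar log loss. The sketch below illustrates this in plain Python under those assumptions. It does not call getML and is not a description of how getML evaluates the loss internally; `mean_cross_entropy` is a hypothetical helper, and clipping probabilities at `eps` is an assumed (common) safeguard against $\log 0$.

```python
import math
from typing import Sequence


def mean_cross_entropy(
    labels: Sequence[int],
    probs: Sequence[Sequence[float]],
    eps: float = 1e-15,
) -> float:
    """Average cross entropy between one-hot targets and predicted class probabilities.

    With a one-hot p, -sum_x p(x) log q(x) collapses to -log q(true class).
    The predicted probability of the true class is clipped to [eps, 1] to avoid log(0).
    """
    if len(labels) != len(probs):
        raise ValueError("need one probability vector per label")
    total = 0.0
    for label, q in zip(labels, probs):
        q_true = min(max(q[label], eps), 1.0)
        total -= math.log(q_true)
    return total / len(labels)


labels = [0, 1, 1]
probs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
print(f"{mean_cross_entropy(labels, probs):.4f}")
```

Confident correct predictions (such as 0.9 for class 0) add little to the loss, while the third sample, which gives its true class only 0.4, dominates the average.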